Throughout his stories, Isaac Asimov poses questions about the kinds of rights robots (artificial intelligences so advanced they have developed a sense of self) should have. Should a robot be able to choose its employment? Should it be able to demand safe working conditions from its employer? If it were to commit a crime, in what capacity would it be tried? These questions may seem far-off, even ridiculous to consider, but two bots recently suggested we may face them sooner than we think.

Bots (in this case, computer programs scripted to perform a particular task within a set of parameters) are nowhere near advanced enough to have a sense of self, or anything we’d term consciousness. They operate within the conditions their programmers set, no more and no less.

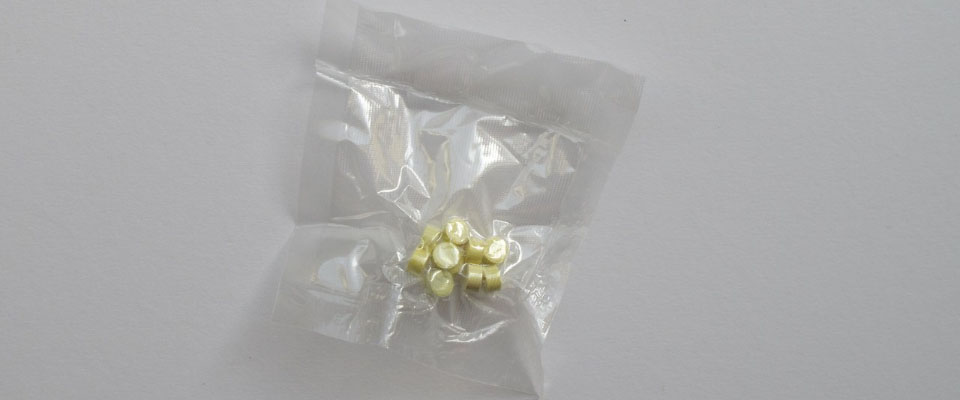

Even so, the darknet shopper, operating entirely within those conditions, broke the law several times. Its task was to visit a marketplace on the darknet (part of what is often called the Deep Web) once a week and make a random purchase with a budget of $100 in Bitcoin. These purchases, shipped to Zurich, then became part of an exhibition about the darknet. Notably, the shopper bought ten ecstasy pills and a legitimate-looking forged Hungarian passport, both illegal contraband. The bot’s coders and the exhibition’s creators took full responsibility for its actions.

It also bought plenty of innocuous and even amusing things, such as a fake Sprite can used as a stash container. All of the purchases were documented on the exhibition’s blog.

More recently, another programmer apparently ran into a similar problem. His bot ran a Twitter account, posting tweets it composed by taking his own Twitter feed and rearranging the words into new sequences. The bot made an unspecified death threat that appeared so legitimate it prompted police to question the programmer, who was technically the owner of the account. Interestingly, the threat was made in reply to another bot-run Twitter account. The bot’s account has since been removed at police request. The programmer described the situation on Twitter:

The randomly-generated words made one. And so they come to me.

— court jeffster (@jvdgoot) February 11, 2015

Which I think is an interesting legal angle.

— court jeffster (@jvdgoot) February 11, 2015

The question, then, is who is legally responsible for a bot’s actions. In both cases, the programmers took responsibility, voluntarily or otherwise. However, Ryan Calo, writing for Forbes, suggests this need not always be the case, at least in the US, depending on the wording of the particular law. Liability may rest on intent, which could be difficult to establish for the darknet shopper, since its purchases were random by design.

That, in turn, raises problems of its own. Hypothetically (and I’m by no means a legal expert), if a programmer is found neither to have intended the law to be broken nor to have recklessly allowed it, yet the law was still broken, can the bot itself be said to have intended to break it? Even in this context, the argument still seems silly. But these questions may take on practical weight sooner than we think.