I hear the term “artificial intelligence” in two contexts.

One is in science fiction. I love movies, books, and video games about smart robots: friendly, murderous, misunderstood, or loving robots. And they’re incredibly popular. The Wikipedia List of Artificial Intelligence Films has over forty entries. Google lists even more.

But here’s the thing: artificial intelligence is real.

People build software that can learn things. I’ve done it myself. Two summers ago, I worked on improving artificial neural networks.

An artificial neural network is a type of computer model whose structure loosely mimics the way neurons are connected in the brain.

Here’s some detail about how that might work. If you’re not interested, skip to right after the next image.

Let’s say I want my computer to recognize the letters of the alphabet from human handwriting. If I scan an image of the handwritten word CAT, the computer sees the image purely as pixels and hues. As far as the computer is concerned, the image is just a list of boxes and colors.
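To make "a list of boxes and colors" concrete, here is a minimal sketch in Python. The 5x5 grid and the names `LETTER_C` and `flatten` are made up for illustration; a real scan would have far more pixels and a range of colors rather than just 0 and 1.

```python
# A hypothetical 5x5 black-and-white "scan" of a handwritten C.
# 1 means an inked pixel, 0 means blank paper.
LETTER_C = [
    [0, 1, 1, 1, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
]


def flatten(image):
    """Turn a grid of pixels into the flat list of numbers a program works with."""
    return [pixel for row in image for pixel in row]


pixels = flatten(LETTER_C)
print(len(pixels))  # 25 numbers, one per box
print(pixels)
```

Nothing in that list says "C." It is just 25 numbers, and any meaning has to be worked out from them.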

You and I have no problem looking at different arrangements of ink on a page and knowing that they’re all the letter C. It can be black, blue, squashed, or stretched-out, but you know a C when you see one. People don’t get confused because somebody drew the letter a bit taller than last time.

In contrast, a computer doesn’t naturally put pixels together into words. It has to learn how to do that, and it’s not easy.

I could tell my computer that a particular image says “CAT,” but all that teaches it is that some exact arrangement of pixels corresponds to “CAT.” Another written CAT will have a different arrangement of pixels. So the next time I want to scan a written word, I’m right back where I started. My computer will see the new set of pixels and be clueless. What I want, instead, is a model that can recognize the letters C, A, and T, no matter who wrote them.
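Here is a rough sketch of why memorizing exact pixels doesn't work. The two tiny images and the `memorized` lookup table are hypothetical; the second C is the same shape as the first, just drawn one pixel to the right.

```python
# Two hypothetical 5x5 scans of the letter C (1 = ink, 0 = blank).
C_FIRST = [
    [0, 1, 1, 1, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
]

# The same C, written one pixel further to the right.
C_SHIFTED = [
    [0, 0, 1, 1, 1],
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 1, 1],
]


def flatten(image):
    """Flatten the grid into a tuple so it can be used as a dictionary key."""
    return tuple(pixel for row in image for pixel in row)


# "Teaching" the computer one exact arrangement of pixels.
memorized = {flatten(C_FIRST): "C"}


def recognize(image):
    """Look up the exact pixel arrangement; anything else is unknown."""
    return memorized.get(flatten(image), "unknown")


print(recognize(C_FIRST))    # C
print(recognize(C_SHIFTED))  # unknown
```

The lookup only succeeds on the one image it has already seen, which is exactly the "right back where I started" problem.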

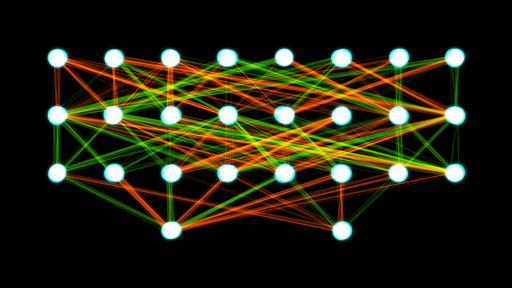

An artificial neural network is one way to do this. Conceptually, what I do is set up some “nodes” (those brightly colored circles in the picture) and connections between those nodes. I feed my neural network an image, like a handwritten C. That input travels through the connections between nodes, according to some predetermined mathematical functions. Based on all of that, the neural network makes a guess about what that letter is.
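The idea of input traveling through connections to produce a guess can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the layer sizes (25 pixels, 4 middle nodes, 3 output nodes), the sigmoid function, and the random starting weights. It is not the network from my project.

```python
import math
import random

LETTERS = ["C", "A", "T"]


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def make_layer(n_inputs, n_outputs, rng):
    """One weight per connection: n_outputs rows of n_inputs weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_outputs)]


def forward(layer, inputs):
    """Each node sums its weighted inputs, then applies the sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(weights, inputs)))
            for weights in layer]


def guess(hidden_layer, output_layer, pixels):
    """Pass the pixels through both layers and pick the highest-scoring letter."""
    hidden = forward(hidden_layer, pixels)
    scores = forward(output_layer, hidden)
    best = max(range(len(scores)), key=lambda i: scores[i])
    return LETTERS[best], scores


rng = random.Random(0)  # fixed seed so the run is repeatable
hidden_layer = make_layer(25, 4, rng)
output_layer = make_layer(4, 3, rng)

# A hypothetical 5x5 handwritten C, flattened to 25 numbers (1 = ink).
pixels = [0, 1, 1, 1, 0,
          1, 0, 0, 0, 0,
          1, 0, 0, 0, 0,
          1, 0, 0, 0, 0,
          0, 1, 1, 1, 0]

letter, scores = guess(hidden_layer, output_layer, pixels)
print(letter, scores)
```

With random weights the guess is arbitrary, since the network hasn't learned anything yet.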

If it’s right, great. If it’s wrong, the network “learns from its mistake.” The information that the guess was wrong helps the neural network improve itself. After a wrong guess is made, the strengths of the network’s connections update a little. Some connections become stronger, and others become weaker. The changes are made so that the neural network is closer to correctly guessing that C. That way, the guess is more likely to be correct next time.
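Here is a toy version of that "learn from a mistake" step. It assumes a single layer of connections and a simple error-driven update (each weight moves in proportion to how wrong its output node was). That is a simplification I chose for the sketch; real networks usually have more layers and use backpropagation. The names `learn` and `rate` are hypothetical.

```python
import math

LETTERS = ["A", "C", "T"]


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def scores_for(weights, pixels):
    """One score per letter: weighted sum of the pixels, then sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, pixels)))
            for row in weights]


def guess(weights, pixels):
    """Return the letter whose output node has the highest score."""
    scores = scores_for(weights, pixels)
    return LETTERS[max(range(len(scores)), key=lambda i: scores[i])]


def learn(weights, pixels, correct_letter, rate=0.5):
    """Nudge each connection so the correct letter scores higher next time."""
    scores = scores_for(weights, pixels)
    for i, row in enumerate(weights):
        target = 1.0 if LETTERS[i] == correct_letter else 0.0
        error = target - scores[i]
        for j, x in enumerate(pixels):
            row[j] += rate * error * x  # stronger or weaker connection


# A hypothetical 5x5 handwritten C, flattened to 25 numbers (1 = ink).
pixels = [0, 1, 1, 1, 0,
          1, 0, 0, 0, 0,
          1, 0, 0, 0, 0,
          1, 0, 0, 0, 0,
          0, 1, 1, 1, 0]

# All connections start at zero, so every letter ties and "A" wins by default.
weights = [[0.0] * len(pixels) for _ in LETTERS]

before = guess(weights, pixels)
if before != "C":
    learn(weights, pixels, "C")  # the guess was wrong, so adjust
after = guess(weights, pixels)
print(before, "->", after)  # A -> C
```

One example is enough here only because the toy has a single image to get right. With many writers and many letters, the same small nudges have to be repeated over a very large number of samples.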

The actual learning process isn’t exactly step-by-step the way I’m describing it, but it draws on those principles.

Wikipedia has a detailed article on the subject.

If I set up my neural network just right, I can feed it, say, three million handwriting samples and end up with a computer that can recognize letters from anyone’s handwriting.

Artificial neural networks are just one type of machine learning. Machine learning algorithms are behind Facebook’s facial recognition software and Google’s self-driving cars. They let computers learn things without having the skills or information directly programmed in.

The system I built in my summer project wasn’t witty or power-hungry. Nor are the ones used every day in modern technology.

But these systems are getting smarter. And the concern is that they might get dangerous.

There’s a detailed analysis of this subject on Wait But Why. I’m hoping to discuss it in a future post.